You have likely already come across the term TEI in digital humanities circles. Perhaps you have (like me) downloaded a TEI edition of a text before, but have not known how to read it or what to do with it. You may have (also like me) even deployed the term in casual conversation without completely understanding what TEI is or what it does.

This post aims to provide you with a concise and basic introduction to TEI and XML markup. I hope to provide definitions for all technical terms and to point you to useful resources to reference either when using TEI-encoded texts or when you eventually create your own. I have also included some step-by-step instructions on how to create an XML markup of a text in the last section of this post.

- What is TEI?

TEI is an acronym for the Text Encoding Initiative. The “text” here is clear enough for the time being. But what about “encoding?” All text that we view on a website is encoded with information that affects the way we see the text represented, or the way in which your computer sorts and interprets the text. There are many different languages in which one can encode text.

Because there is no single agreed-upon format or standard for encoding text, a consortium of humanists, social scientists and linguists founded the TEI in 1994 to standardize the way in which we encode text. It has since become a hugely popular standard for rendering text digitally and is still widely used today.

- What is XML?

Most versions of the TEI standards (of which there are a few) make use of XML: Extensible Markup Language. XML is a text-based coding metalanguage similar to HTML (in fact, both are markup languages, hence the “ML” at the end of each acronym) that, like the standards of TEI, has undergone several changes and updates over the years.

XML documents contain information and meta-information that are expressed through tags. The tags are similar to those used in HTML, if you are familiar with these. Below is a brief example of an XML document:

<p>the quick brown fox</p>

<!–this is a test–>

<p>jumps over the lazy dog</p>

The letters bounded by these symbols “< >” above are tags. In this case, the tag being used is “<p>” which is used to separate paragraphs. All tags must be both opened and closed, or your code will not work. “<p>” is the opening tag and “</p>” is the closing; all tags are closed in the same way. The text in between the opening and closing tags is what will be encoded.

To insert comments into your XML document that will not have any bearing on the function of your code, follow the format found on the middle line of the document above, i.e. <!–YOUR TEXT HERE –>

- Why know or use TEI and XML?

Before we delve into the actual process of creating a simple XML markup of a text, you might already be wondering precisely how learning a bit of XML and TEI will be beneficial to your scholarship.

There are a few ways in which we can envision the uses of a working knowledge of XML and TEI. First and foremost among these is the possibility of you creating and disseminating your own TEI editions of texts – perhaps a transcription of a manuscript few others have seen, or handwritten notes you have uncovered in your archival research. In this case, you can let your more tech-savvy colleagues add your editions to their corpus – to query and explore the documents in any way they see fit.

As humanities scholars in the twenty-first century, we often find ourselves asking broad and provocative questions about our disciplines and our work. One particularly captivating question has always been about the nature of what we call “the text.” The digitization of texts has provided this line of questioning renewed energy, and a basic understanding of the encoding process would also arm you with the vocabulary to take part in this conversation.

A final use of TEI and XML would involve you querying documents created by others. There are an enormous number of TEI editions of texts available freely online, and the more comfortable you are with code, the more you can do with them. One deceptively simple way of visualizing your XML code is turning it into a spreadsheet using Microsoft Excel. This is especially useful if you wish to add data from a TEI edition into a database for your thesis or dissertation. To do this in Excel, simply select the “data” tab on the top utility bar and specify “from other sources” before selecting your XML file.

- Finally, actually doing TEI and XML.

In this section, I will quickly walk you through the process of creating a simple XML document in accordance with the standards of TEI.

-What you will need

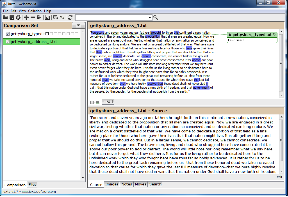

A plain text editing software is the most important tool you are going to need. Atom is a wonderful free software that works well on Macs, as is Sublime Text 2. When saved as an XML file, your tags should appear in color, making the markup process easier, and making it more noticeable when you have forgotten to close a tag. You will also need a plain text copy of the text you are working with.

-Tags

We have already gone over the basics of tags above. Another key issue here is deciding which tags to use, i.e. which tags are useful for the goals of your project or edition. These tags and their correct formatting can be found online (look http://www.tei-c.org/release/doc/tei-p5-doc/en/html/ref-tag.html for a list of element tags) but a cursory list of common ones can be found below:

<p>; <body> ; <l> ; <name> ; <placeName> ; <abbr> ; <head> ; <quote> ; <date> ; <time>

Once you have tagged all the elements you wish to tag in your text (making sure to close all of your tags!) you have to add a TEI header in order to ensure that your document adheres to the TEI standards. Examples of TEI headers can be found here: http://www.tei-c.org/release/doc/tei-p5-doc/en/html/HD.html

-Get Validated!

A useful resource for checking your code can be found here: http://teibyexample.org/xquery/TBEvalidator.xq

This validator will let you know whether your code follows the TEI standards, and if not, where your mistakes can be found.

- Further Reading and Resources

There are lots of online resources for TEI and XML, but here are a few of my personal favorites:

http://www.tei-c.org/index.xml

https://wiki.dlib.indiana.edu/display/ETDC/TEI+Tutorial

ftp://ftp.uic.edu/pub/tei/teiteach/slides/louslide.html

Alexander Profaci is an outgoing MA student in Medieval Studies at Fordham University. He will be entering the PhD program in History at Johns Hopkins University in the fall. Follow him on Twitter @icaforp.